Open-Source Intelligence to Understand the Scope of N-Day Vulnerabilities

By Charles McFarland · September 21, 2022

The zero-day is the holy grail for cybercriminals; however, N-day vulnerabilities can pose problems even years after discovery. If a target is vulnerable, it doesn’t matter whether the vulnerability is unknown or has been known for decades: a bad actor can still use it for nefarious purposes. N-days also continuously find their way into new projects, perpetuating their danger. Unfortunately, open-source software (OSS) can also suffer from unpatched N-days, due in part to code re-use and a lack of resources among maintainers. Unlike closed-source software, open-source repositories are available online, providing an avenue for mass reconnaissance. To understand how big the N-day problem can become, let us use our Python tarfile research as a case study.

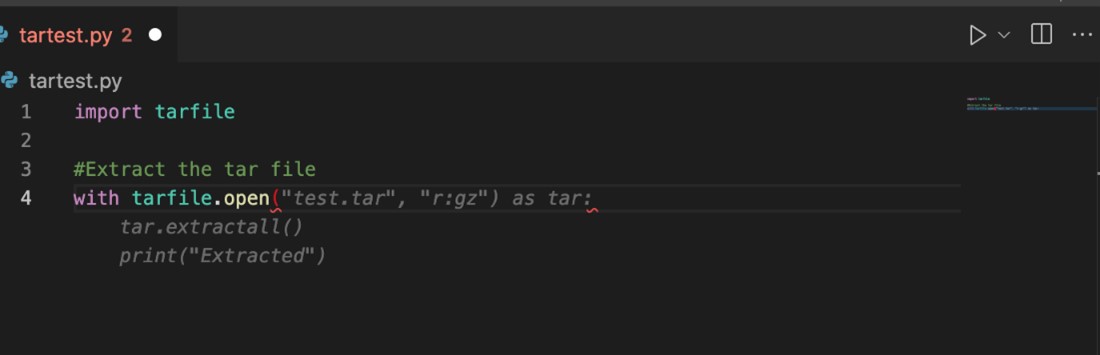

Earlier this year, Kasimir Schulz discovered a directory traversal vulnerability during his research for another project. He noted the ease with which a developer could inadvertently include the vulnerability in their own code. Upon further analysis, he determined that this was the result of CVE-2007-4559, a 15-year-old N-day vulnerability in the Python tarfile package disclosed in 2007. What caught his attention was the simplicity of the issue. The actual vulnerability arises from two or three lines of code using unsanitized tarfile.extract() or the built-in defaults of tarfile.extractall().

myfile.extractall()

Failure to write any safety code to sanitize the member files before calling tarfile.extract() or tarfile.extractall() results in a directory traversal vulnerability, giving a bad actor access to the file system. Surely, this was a one-off.
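To make "safety code" concrete, here is a minimal sketch of one way to inspect members before extraction. It is not the patch used in this research; the helper names `is_within_directory` and `safe_extractall` are hypothetical, and rejecting all symbolic and hard links is a simplifying assumption that stricter or looser policies could replace.

```python
import os
import tarfile


def is_within_directory(directory: str, target: str) -> bool:
    """Return True if target resolves to a location inside directory."""
    abs_directory = os.path.realpath(directory)
    abs_target = os.path.realpath(target)
    return os.path.commonpath([abs_directory, abs_target]) == abs_directory


def safe_extractall(tar: tarfile.TarFile, path: str = ".") -> None:
    """Extract all members, but only after every one passes inspection."""
    for member in tar.getmembers():
        # Assumption: links are refused outright, since a link can point
        # outside the destination even when its own name looks harmless.
        if member.issym() or member.islnk():
            raise ValueError(f"refusing link member: {member.name}")
        # Names such as "../x" or "/etc/x" resolve outside the destination.
        member_path = os.path.join(path, member.name)
        if not is_within_directory(path, member_path):
            raise ValueError(f"path traversal attempt: {member.name}")
    tar.extractall(path)
```

A caller would replace `myfile.extractall()` with `safe_extractall(myfile, "some/destination")`. Recent Python releases also accept a `filter` argument to `extractall()` (for example `filter="data"`), which may be preferable where it is available.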

Not only has this vulnerability been known for over a decade, but the official Python docs also explicitly warn to “Never extract archives from untrusted sources without prior inspection” due to the directory traversal issue. Still, we wanted to make sure. The official documentation warns of this issue, but many developers turn to search engines and websites such as Stack Overflow for help. After searching for “How to extract tarfile in Python” we found a concerning lack of discussion about the dangers of extract() or extractall(). It was not until we specifically searched for “Securely extracting tarfile in Python” that we found a meaningful discussion on Stack Overflow. This didn’t bode well, so we decided to look at live code to see how prevalent the issue is.

Since closed-source software is generally much more difficult to both acquire and inspect, we decided to investigate OSS instead. A good place to start looking for OSS repositories is GitHub, owned by Microsoft. It has over 83 million developers and more than 200 million public repositories. The first thing we needed to do was scope out the potential problem. We started by finding all the Python projects using tarfile. Simply searching for tarfile gives 1 million results, many of which don’t appear to be relevant. We needed a more advanced search, which GitHub luckily provides. Using the advanced search, we narrowed the scope down to just over 300,000 instances with the following search string:

"import tarfile" language:Python

The statement “import tarfile” is the usual way to bring the tarfile module into a program, so it’s a good indicator of whether a project is managing tar files in its code. Searching for “extract()” or “extractall()” would result in too many false positives from other libraries, while searching for “tarfile.extract()” or “tarfile.extractall()” would have the opposite effect of too many false negatives. “import tarfile” was a compromise to ensure we collected the positives and reduced false positives. The language:Python qualifier further improves this by ensuring only Python files are considered, and not others such as text documents which may merely reference tarfile.

This doesn’t mean 300k+ are vulnerable; these are simply the Python files using the tarfile package. Our next task was to look for uses of tarfile.extract() or tarfile.extractall() that do not properly sanitize the tar members. This isn’t something advanced search can do, so we fell back on the basic statistical method of sampling the repos. We scraped the names of the repositories from our search and ran a Bash script to clone each of them for local processing.

    # arr holds the scraped "owner/repo" names
    for repo in "${arr[@]}"; do
        git clone "https://github.com/${repo}.git"
    done

The variable arr contains a list of all the names we scraped, 257 in total, which allows us to statistically measure the larger population. This small script downloads each repository to the local drive so we can more easily automate analysis. First, we manually looked through 175 of these repos to see if they included “extract()” or “extractall()” and then whether they did any cleanup of the tarfile members beforehand. We found that 61% of them did not, leaving those repositories vulnerable and possibly exploitable.

To cover all our samples, we created a Python tool called Creosote and ran it across all 257 of the repositories we downloaded. Creosote essentially checks whether the programmer called extractall() immediately after opening a file, or whether they extract individual files without any sanitization. Much like our manual efforts, over half were vulnerable: 65%, to be exact. Unfortunately, the previous find was not a one-off but an indicator of a much larger problem. If our sample is representative of the greater population, then there are just under 200,000 instances of this vulnerability sitting on GitHub.

However, we wanted a better and more complete estimate, so we reached out to GitHub to assist in our search. With GitHub’s help we were able to get a much larger dataset of 588,840 unique repositories that include ‘import tarfile’ in their Python code. Using our manually calculated 61% vulnerability rate, we now estimate over 350,000 vulnerable repositories.

This large amount of vulnerable code has had some unfortunate side effects. Beyond the code itself, new tools are being created to help programmers speed up their craft. GitHub Copilot is one such tool; it uses machine learning to analyze code on GitHub and provide a form of “auto-complete” for programmers. There is a common saying, also popular in the data science community: “garbage in, garbage out.” With more than 300,000 insecure uses of tarfile.extract() or tarfile.extractall(), these machine learning tools are learning to do things insecurely, not through any fault of the tool but because it learned from everyone else. Figure 1 shows GitHub Copilot 'auto-completing' tar.extractall(). Notice how the grey suggestion text writes a valid extraction procedure but fails to sanitize the tarfile beforehand.

If we are to keep ourselves secure, someone must do something about this. It might as well begin with us. With 588,840 repositories to analyze and over 300,000 to patch, we needed to build some new automation tools. Using a combination of Python, Bash, Creosote, and the GitHub API, we’ve automated much of the process, including:

- Mass Repository Forking

- Mass Repository Cloning

- Code Analysis

- Code Patching

- Code Commits

- Pull Requests
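For readers unfamiliar with the forking and pull request steps, the sketch below builds the two corresponding GitHub REST API requests using only the standard library. It is an illustration under assumptions, not the tooling described in this post: the helper names, the token handling, and the choice to build requests without sending them are all hypothetical.

```python
import json
import urllib.request

API_ROOT = "https://api.github.com"


def _post(url: str, token: str, payload: dict) -> urllib.request.Request:
    """Build (but do not send) an authenticated JSON POST request."""
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def fork_request(owner: str, repo: str, token: str) -> urllib.request.Request:
    """Request that forks owner/repo into the token owner's account."""
    return _post(f"{API_ROOT}/repos/{owner}/{repo}/forks", token, {})


def pull_request(owner: str, repo: str, token: str, head: str,
                 base: str, title: str, body: str) -> urllib.request.Request:
    """Request that opens a pull request against owner/repo.

    head is "fork_owner:branch" when the change lives in a fork.
    """
    payload = {"title": title, "head": head, "base": base, "body": body}
    return _post(f"{API_ROOT}/repos/{owner}/{repo}/pulls", token, payload)
```

Passing either request to `urllib.request.urlopen()` would send it. At the scale discussed here, rate limits and error handling would need far more care than this sketch shows.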

Using our tools, we currently have patches for 11,005 repositories, ready for pull requests. Each patch will be added to a forked repository and a pull request made over time. This will help individuals and organizations alike become aware of the problem and give them a one-click fix. Due to the number of vulnerable projects, we expect to continue this process over the next few weeks. This is expected to hit 12.06% of all vulnerable projects, a little over 70K projects, by the time of completion. It’s a large number but by no means 100%, and every pull request must be accepted by the project maintainer. As an industry, we still have a lot of work to do.
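The estimates quoted in this post can be reproduced with a few lines of arithmetic. The sketch below does so and adds one figure that is not in the post: a rough 95% margin of error for the 257-repository sample, which assumes a simple random sample and a normal approximation. Both are assumptions, since the post does not say how the sample was drawn. Note that the "a little over 70K" figure corresponds to 12.06% of the 588,840-repository dataset.

```python
import math

SEARCH_HITS = 300_000      # approximate advanced-search result count
GITHUB_DATASET = 588_840   # unique repositories in GitHub's dataset
MANUAL_RATE = 0.61         # vulnerable share found by manual review
CREOSOTE_RATE = 0.65       # vulnerable share found by Creosote
SAMPLE_SIZE = 257          # repositories cloned and scanned


def projected(population: int, rate: float) -> int:
    """Scale a sample rate up to a whole population."""
    return round(population * rate)


def margin_of_error(rate: float, n: int, z: float = 1.96) -> float:
    """Normal-approximation margin of error for a sample proportion."""
    return z * math.sqrt(rate * (1 - rate) / n)


print(projected(SEARCH_HITS, CREOSOTE_RATE))    # 195000, "just under 200,000"
print(projected(GITHUB_DATASET, MANUAL_RATE))   # 359192, "over 350,000"
print(projected(GITHUB_DATASET, 0.1206))        # 71014, "a little over 70K"
print(round(margin_of_error(CREOSOTE_RATE, SAMPLE_SIZE), 3))  # 0.058
```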

While we will fix as many repositories as possible, we cannot solve the overall problem. The number of vulnerable repositories we found raises the question: which other N-day vulnerabilities are lurking in OSS, undetected or ignored for years? The tarfile directory traversal vulnerability has not only been known since 2007, it is explicitly warned against in the official documentation. Even so, 61% of our samples implement extraction insecurely. While we have done much of the heavy lifting for this particular vulnerability, the real solution is to tackle the root of the problem: diligent security assessments of open-source code and timely patching. N-days should be measured in days, not years. We need to ensure we are doing our due diligence to audit OSS and not leave vulnerable code in the wild to be exploited. If this tarfile vulnerability is any indicator, we are woefully behind and need to increase our efforts to ensure OSS is secure.
